Electrocardiogram (ECG) Classification - Part Three (Final)

Combined Models

Another area I want to test is combining the models built above for the individual input datasets into a single model.

There is no point in discussing this at length, so let's dive right in …

Combined model for metadata X and ECG waveforms Y

I will create a model in which the outputs of the X and Y branches are joined into a single feature vector by a Concatenate layer:

In [41]:

def create_model04(X_shape, Y_shape, Z_shape):
    # One input per data source: metadata X and 12-lead ECG waveforms Y
    X_inputs = keras.Input(X_shape[1:], name='X_inputs')
    Y_inputs = keras.Input(Y_shape[1:], name='Y_inputs')
    # Reuse the branch builders from the earlier single-input models
    X = create_X_model(X_inputs)
    Y = create_Y_model(Y_inputs, filters=(64, 128, 256), kernel_size=(7, 3, 3))
    # Join both feature vectors along the last axis, then classify with a shared dense head
    X = keras.layers.Concatenate(name='Z_concat')([X, Y])
    X = keras.layers.Dense(128, activation='relu', name='Z_dense_1')(X)
    X = keras.layers.Dense(128, activation='relu', name='Z_dense_2')(X)
    X = keras.layers.Dropout(0.5, name='Z_drop_1')(X)
    # One sigmoid unit per diagnostic class (multi-label output)
    outputs = keras.layers.Dense(Z_shape[-1], activation='sigmoid', name='Z_outputs')(X)
    model = keras.Model(inputs=[X_inputs, Y_inputs], outputs=outputs, name='X_Y_model')
    return model
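The NumPy sketch below is an illustration only and is not part of the notebook. It shows what Z_concat does to the two branch outputs. The batch size of 4 and the random arrays are placeholders. The widths 32 and 256 come from the X_drop_2 and Y_drop rows of the model summary.

```python
import numpy as np

# Placeholder branch outputs for a batch of 4 samples:
# the X branch ends in a (None, 32) tensor, the Y branch in (None, 256)
batch = 4
x_features = np.random.rand(batch, 32)
y_features = np.random.rand(batch, 256)

# keras.layers.Concatenate joins along the last axis by default (axis=-1)
z = np.concatenate([x_features, y_features], axis=-1)
print(z.shape)  # (4, 288)

# The first 32 columns come from X, the remaining 256 from Y
assert np.array_equal(z[:, :32], x_features)
assert np.array_equal(z[:, 32:], y_features)
```

The dense head therefore receives a single 288-wide vector per record. It has no notion of which columns came from which input.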

Now I can compile the model:

In [42]:

model04 = create_model04(X_train.shape, Y_train.shape, Z_train.shape)
model04.compile(optimizer='adam', loss='binary_crossentropy', metrics=['binary_accuracy', 'Precision', 'Recall'])
model04.summary()

Model: "X_Y_model"
__________________________________________________________________________________________________
Layer (type)                       Output Shape        Param #  Connected to
==================================================================================================
Y_inputs (InputLayer)              [(None, 1000, 12)]  0        []
Y_norm (Normalization)             (None, 1000, 12)    25       ['Y_inputs[0][0]']
Y_conv_1 (Conv1D)                  (None, 1000, 64)    5440     ['Y_norm[0][0]']
Y_norm_1 (BatchNormalization)      (None, 1000, 64)    256      ['Y_conv_1[0][0]']
Y_relu_1 (ReLU)                    (None, 1000, 64)    0        ['Y_norm_1[0][0]']
Y_pool_1 (MaxPooling1D)            (None, 500, 64)     0        ['Y_relu_1[0][0]']
Y_conv_2 (Conv1D)                  (None, 500, 128)    24704    ['Y_pool_1[0][0]']
Y_norm_2 (BatchNormalization)      (None, 500, 128)    512      ['Y_conv_2[0][0]']
Y_relu_2 (ReLU)                    (None, 500, 128)    0        ['Y_norm_2[0][0]']
X_inputs (InputLayer)              [(None, 7)]         0        []
Y_pool_2 (MaxPooling1D)            (None, 250, 128)    0        ['Y_relu_2[0][0]']
X_norm (Normalization)             (None, 7)           15       ['X_inputs[0][0]']
Y_conv_3 (Conv1D)                  (None, 250, 256)    98560    ['Y_pool_2[0][0]']
X_dense_1 (Dense)                  (None, 32)          256      ['X_norm[0][0]']
Y_norm_3 (BatchNormalization)      (None, 250, 256)    1024     ['Y_conv_3[0][0]']
X_drop_1 (Dropout)                 (None, 32)          0        ['X_dense_1[0][0]']
Y_relu_3 (ReLU)                    (None, 250, 256)    0        ['Y_norm_3[0][0]']
X_dense_2 (Dense)                  (None, 32)          1056     ['X_drop_1[0][0]']
Y_aver (GlobalAveragePooling1D)    (None, 256)         0        ['Y_relu_3[0][0]']
X_drop_2 (Dropout)                 (None, 32)          0        ['X_dense_2[0][0]']
Y_drop (Dropout)                   (None, 256)         0        ['Y_aver[0][0]']
Z_concat (Concatenate)             (None, 288)         0        ['X_drop_2[0][0]', 'Y_drop[0][0]']
Z_dense_1 (Dense)                  (None, 128)         36992    ['Z_concat[0][0]']
Z_dense_2 (Dense)                  (None, 128)         16512    ['Z_dense_1[0][0]']
Z_drop_1 (Dropout)                 (None, 128)         0        ['Z_dense_2[0][0]']
Z_outputs (Dense)                  (None, 5)           645      ['Z_drop_1[0][0]']
==================================================================================================
Total params: 185997 (726.56 KB)
Trainable params: 185061 (722.89 KB)
Non-trainable params: 936 (3.66 KB)
__________________________________________________________________________________________________
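You can check the parameter counts in the summary by hand. The sketch below assumes the standard Keras formulas:

- A Dense layer has n_in·n_out weights plus n_out biases.
- A Conv1D layer has kernel·c_in·filters weights plus one bias per filter.
- BatchNormalization keeps four vectors per channel. Gamma and beta are trainable; the moving mean and variance are not.
- An adapted Normalization layer stores a mean and a variance per feature plus a scalar count.

The helper functions are hypothetical. The layer sizes are read off the summary.

```python
def dense_params(n_in, n_out):
    # weight matrix plus one bias per output unit
    return n_in * n_out + n_out


def conv1d_params(kernel, c_in, filters):
    # kernel x input-channels x filters weights plus one bias per filter
    return kernel * c_in * filters + filters


def batchnorm_params(channels):
    # gamma and beta (trainable) + moving mean and moving variance (non-trainable)
    return 4 * channels


def normalization_params(features):
    # adapted mean and variance per feature + a scalar sample count (non-trainable)
    return 2 * features + 1


# Layer sizes read off the X_Y_model summary (Y kernels 7, 3, 3; X dense width 32)
layers = {
    'Y_norm': normalization_params(12),
    'Y_conv_1': conv1d_params(7, 12, 64),
    'Y_norm_1': batchnorm_params(64),
    'Y_conv_2': conv1d_params(3, 64, 128),
    'Y_norm_2': batchnorm_params(128),
    'Y_conv_3': conv1d_params(3, 128, 256),
    'Y_norm_3': batchnorm_params(256),
    'X_norm': normalization_params(7),
    'X_dense_1': dense_params(7, 32),
    'X_dense_2': dense_params(32, 32),
    'Z_dense_1': dense_params(32 + 256, 128),  # input is the 288-wide Z_concat
    'Z_dense_2': dense_params(128, 128),
    'Z_outputs': dense_params(128, 5),
}

total = sum(layers.values())
non_trainable = (normalization_params(12) + normalization_params(7)
                 + sum(batchnorm_params(c) // 2 for c in (64, 128, 256)))
print(total, total - non_trainable, non_trainable)  # 185997 185061 936
```

The largest single layer is Y_conv_3 (98,560 parameters), followed by Z_dense_1 (36,992).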

And train it right away:

In [43]:

MODEL_CHECKPOINT = '/kaggle/working/model/model04.ckpt'
callbacks_list = [
    keras.callbacks.EarlyStopping(monitor='val_binary_accuracy', patience=10),
    keras.callbacks.ModelCheckpoint(filepath=MODEL_CHECKPOINT, monitor='val_binary_accuracy', save_best_only=True)
]
history = model04.fit([X_train, Y_train], Z_train, epochs=100, batch_size=32, callbacks=callbacks_list, validation_data=([X_valid, Y_valid], Z_valid))
model04 = keras.models.load_model(MODEL_CHECKPOINT)
model04.save('/kaggle/working/model/model04.keras')

Epoch 1/100
546/546 [==============================] - 14s 17ms/step - loss: 0.5135 - binary_accuracy: 0.7760 - precision: 0.5898 - recall: 0.3968 - val_loss: 0.3685 - val_binary_accuracy: 0.8338 - val_precision: 0.7517 - val_recall: 0.5227
Epoch 2/100
546/546 [==============================] - 8s 15ms/step - loss: 0.3815 - binary_accuracy: 0.8339 - precision: 0.7225 - recall: 0.5655 - val_loss: 0.3438 - val_binary_accuracy: 0.8505 - val_precision: 0.7462 - val_recall: 0.6293
Epoch 3/100
546/546 [==============================] - 6s 10ms/step - loss: 0.3520 - binary_accuracy: 0.8516 - precision: 0.7547 - recall: 0.6187 - val_loss: 0.3874 - val_binary_accuracy: 0.8459 - val_precision: 0.7479 - val_recall: 0.5990
Epoch 4/100
546/546 [==============================] - 9s 17ms/step - loss: 0.3346 - binary_accuracy: 0.8622 - precision: 0.7760 - recall: 0.6454 - val_loss: 0.3259 - val_binary_accuracy: 0.8625 - val_precision: 0.7736 - val_recall: 0.6532
Epoch 5/100
546/546 [==============================] - 8s 15ms/step - loss: 0.3222 - binary_accuracy: 0.8681 - precision: 0.7869 - recall: 0.6616 - val_loss: 0.3106 - val_binary_accuracy: 0.8656 - val_precision: 0.7798 - val_recall: 0.6607
Epoch 6/100
546/546 [==============================] - 8s 15ms/step - loss: 0.3122 - binary_accuracy: 0.8732 - precision: 0.7973 - recall: 0.6739 - val_loss: 0.3083 - val_binary_accuracy: 0.8711 - val_precision: 0.7915 - val_recall: 0.6732
Epoch 7/100
546/546 [==============================] - 6s 11ms/step - loss: 0.3009 - binary_accuracy: 0.8787 - precision: 0.8079 - recall: 0.6874 - val_loss: 0.3148 - val_binary_accuracy: 0.8711 - val_precision: 0.8011 - val_recall: 0.6597
Epoch 8/100
546/546 [==============================] - 9s 16ms/step - loss: 0.2972 - binary_accuracy: 0.8790 - precision: 0.8053 - recall: 0.6928 - val_loss: 0.2975 - val_binary_accuracy: 0.8784 - val_precision: 0.7873 - val_recall: 0.7185
Epoch 9/100
546/546 [==============================] - 8s 15ms/step - loss: 0.2931 - binary_accuracy: 0.8819 - precision: 0.8113 - recall: 0.6991 - val_loss: 0.2907 - val_binary_accuracy: 0.8847 - val_precision: 0.7993 - val_recall: 0.7331
Epoch 10/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2901 - binary_accuracy: 0.8836 - precision: 0.8140 - recall: 0.7039 - val_loss: 0.3016 - val_binary_accuracy: 0.8789 - val_precision: 0.7963 - val_recall: 0.7071
Epoch 11/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2828 - binary_accuracy: 0.8865 - precision: 0.8207 - recall: 0.7097 - val_loss: 0.3254 - val_binary_accuracy: 0.8627 - val_precision: 0.7449 - val_recall: 0.7042
Epoch 12/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2825 - binary_accuracy: 0.8870 - precision: 0.8217 - recall: 0.7108 - val_loss: 0.2861 - val_binary_accuracy: 0.8844 - val_precision: 0.7942 - val_recall: 0.7392
Epoch 13/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2783 - binary_accuracy: 0.8888 - precision: 0.8221 - recall: 0.7194 - val_loss: 0.2960 - val_binary_accuracy: 0.8804 - val_precision: 0.7880 - val_recall: 0.7281
Epoch 14/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2737 - binary_accuracy: 0.8901 - precision: 0.8244 - recall: 0.7225 - val_loss: 0.2795 - val_binary_accuracy: 0.8847 - val_precision: 0.8060 - val_recall: 0.7232
Epoch 15/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2725 - binary_accuracy: 0.8906 - precision: 0.8227 - recall: 0.7273 - val_loss: 0.2797 - val_binary_accuracy: 0.8842 - val_precision: 0.8038 - val_recall: 0.7235
Epoch 16/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2704 - binary_accuracy: 0.8914 - precision: 0.8251 - recall: 0.7282 - val_loss: 0.2810 - val_binary_accuracy: 0.8824 - val_precision: 0.8004 - val_recall: 0.7196
Epoch 17/100
546/546 [==============================] - 9s 17ms/step - loss: 0.2689 - binary_accuracy: 0.8917 - precision: 0.8273 - recall: 0.7266 - val_loss: 0.2885 - val_binary_accuracy: 0.8883 - val_precision: 0.8005 - val_recall: 0.7499
Epoch 18/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2666 - binary_accuracy: 0.8931 - precision: 0.8306 - recall: 0.7291 - val_loss: 0.2870 - val_binary_accuracy: 0.8793 - val_precision: 0.7842 - val_recall: 0.7285
Epoch 19/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2640 - binary_accuracy: 0.8943 - precision: 0.8310 - recall: 0.7349 - val_loss: 0.3127 - val_binary_accuracy: 0.8720 - val_precision: 0.7847 - val_recall: 0.6878
Epoch 20/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2588 - binary_accuracy: 0.8959 - precision: 0.8322 - recall: 0.7408 - val_loss: 0.2906 - val_binary_accuracy: 0.8837 - val_precision: 0.7957 - val_recall: 0.7335
Epoch 21/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2559 - binary_accuracy: 0.8984 - precision: 0.8398 - recall: 0.7431 - val_loss: 0.2920 - val_binary_accuracy: 0.8824 - val_precision: 0.8087 - val_recall: 0.7075
Epoch 22/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2560 - binary_accuracy: 0.8974 - precision: 0.8380 - recall: 0.7406 - val_loss: 0.2740 - val_binary_accuracy: 0.8868 - val_precision: 0.8063 - val_recall: 0.7335
Epoch 23/100
546/546 [==============================] - 9s 16ms/step - loss: 0.2505 - binary_accuracy: 0.9002 - precision: 0.8437 - recall: 0.7469 - val_loss: 0.2773 - val_binary_accuracy: 0.8897 - val_precision: 0.8118 - val_recall: 0.7403
Epoch 24/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2498 - binary_accuracy: 0.9013 - precision: 0.8446 - recall: 0.7507 - val_loss: 0.2842 - val_binary_accuracy: 0.8846 - val_precision: 0.8030 - val_recall: 0.7271
Epoch 25/100
546/546 [==============================] - 8s 15ms/step - loss: 0.2488 - binary_accuracy: 0.9009 - precision: 0.8454 - recall: 0.7479 - val_loss: 0.2716 - val_binary_accuracy: 0.8908 - val_precision: 0.8202 - val_recall: 0.7339
Epoch 26/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2451 - binary_accuracy: 0.9033 - precision: 0.8471 - recall: 0.7572 - val_loss: 0.2892 - val_binary_accuracy: 0.8806 - val_precision: 0.7882 - val_recall: 0.7289
Epoch 27/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2444 - binary_accuracy: 0.9035 - precision: 0.8489 - recall: 0.7559 - val_loss: 0.2891 - val_binary_accuracy: 0.8891 - val_precision: 0.8185 - val_recall: 0.7274
Epoch 28/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2417 - binary_accuracy: 0.9031 - precision: 0.8492 - recall: 0.7534 - val_loss: 0.2857 - val_binary_accuracy: 0.8854 - val_precision: 0.8087 - val_recall: 0.7224
Epoch 29/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2411 - binary_accuracy: 0.9043 - precision: 0.8499 - recall: 0.7583 - val_loss: 0.2764 - val_binary_accuracy: 0.8846 - val_precision: 0.8126 - val_recall: 0.7132
Epoch 30/100
546/546 [==============================] - 8s 15ms/step - loss: 0.2393 - binary_accuracy: 0.9059 - precision: 0.8523 - recall: 0.7629 - val_loss: 0.2731 - val_binary_accuracy: 0.8941 - val_precision: 0.8245 - val_recall: 0.7442
Epoch 31/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2392 - binary_accuracy: 0.9050 - precision: 0.8519 - recall: 0.7592 - val_loss: 0.2821 - val_binary_accuracy: 0.8893 - val_precision: 0.8184 - val_recall: 0.7285
Epoch 32/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2359 - binary_accuracy: 0.9073 - precision: 0.8556 - recall: 0.7655 - val_loss: 0.2758 - val_binary_accuracy: 0.8898 - val_precision: 0.8148 - val_recall: 0.7364
Epoch 33/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2349 - binary_accuracy: 0.9065 - precision: 0.8513 - recall: 0.7671 - val_loss: 0.2871 - val_binary_accuracy: 0.8887 - val_precision: 0.8086 - val_recall: 0.7399
Epoch 34/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2340 - binary_accuracy: 0.9074 - precision: 0.8569 - recall: 0.7644 - val_loss: 0.2672 - val_binary_accuracy: 0.8906 - val_precision: 0.8169 - val_recall: 0.7371
Epoch 35/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2325 - binary_accuracy: 0.9076 - precision: 0.8578 - recall: 0.7640 - val_loss: 0.2790 - val_binary_accuracy: 0.8885 - val_precision: 0.8135 - val_recall: 0.7314
Epoch 36/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2312 - binary_accuracy: 0.9076 - precision: 0.8538 - recall: 0.7690 - val_loss: 0.2766 - val_binary_accuracy: 0.8876 - val_precision: 0.8101 - val_recall: 0.7321
Epoch 37/100
546/546 [==============================] - 9s 17ms/step - loss: 0.2272 - binary_accuracy: 0.9096 - precision: 0.8583 - recall: 0.7730 - val_loss: 0.2598 - val_binary_accuracy: 0.8942 - val_precision: 0.8175 - val_recall: 0.7545
Epoch 38/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2274 - binary_accuracy: 0.9102 - precision: 0.8599 - recall: 0.7736 - val_loss: 0.2663 - val_binary_accuracy: 0.8931 - val_precision: 0.8220 - val_recall: 0.7428
Epoch 39/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2275 - binary_accuracy: 0.9103 - precision: 0.8606 - recall: 0.7731 - val_loss: 0.2714 - val_binary_accuracy: 0.8907 - val_precision: 0.8173 - val_recall: 0.7374
Epoch 40/100
546/546 [==============================] - 8s 15ms/step - loss: 0.2252 - binary_accuracy: 0.9110 - precision: 0.8632 - recall: 0.7733 - val_loss: 0.2699 - val_binary_accuracy: 0.8943 - val_precision: 0.8162 - val_recall: 0.7570
Epoch 41/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2234 - binary_accuracy: 0.9105 - precision: 0.8613 - recall: 0.7732 - val_loss: 0.2834 - val_binary_accuracy: 0.8888 - val_precision: 0.8128 - val_recall: 0.7342
Epoch 42/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2233 - binary_accuracy: 0.9117 - precision: 0.8642 - recall: 0.7755 - val_loss: 0.2745 - val_binary_accuracy: 0.8894 - val_precision: 0.7991 - val_recall: 0.7578
Epoch 43/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2197 - binary_accuracy: 0.9123 - precision: 0.8647 - recall: 0.7776 - val_loss: 0.2963 - val_binary_accuracy: 0.8898 - val_precision: 0.8227 - val_recall: 0.7253
Epoch 44/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2189 - binary_accuracy: 0.9140 - precision: 0.8667 - recall: 0.7829 - val_loss: 0.2891 - val_binary_accuracy: 0.8875 - val_precision: 0.8061 - val_recall: 0.7371
Epoch 45/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2194 - binary_accuracy: 0.9130 - precision: 0.8654 - recall: 0.7798 - val_loss: 0.2812 - val_binary_accuracy: 0.8907 - val_precision: 0.8151 - val_recall: 0.7406
Epoch 46/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2186 - binary_accuracy: 0.9131 - precision: 0.8636 - recall: 0.7826 - val_loss: 0.2920 - val_binary_accuracy: 0.8861 - val_precision: 0.8101 - val_recall: 0.7242
Epoch 47/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2157 - binary_accuracy: 0.9142 - precision: 0.8683 - recall: 0.7817 - val_loss: 0.2746 - val_binary_accuracy: 0.8889 - val_precision: 0.8192 - val_recall: 0.7257
Epoch 48/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2153 - binary_accuracy: 0.9145 - precision: 0.8694 - recall: 0.7821 - val_loss: 0.2672 - val_binary_accuracy: 0.8924 - val_precision: 0.8139 - val_recall: 0.7506
Epoch 49/100
546/546 [==============================] - 6s 10ms/step - loss: 0.2145 - binary_accuracy: 0.9159 - precision: 0.8715 - recall: 0.7856 - val_loss: 0.2997 - val_binary_accuracy: 0.8899 - val_precision: 0.8043 - val_recall: 0.7524
Epoch 50/100
546/546 [==============================] - 6s 11ms/step - loss: 0.2123 - binary_accuracy: 0.9152 - precision: 0.8697 - recall: 0.7849 - val_loss: 0.2770 - val_binary_accuracy: 0.8938 - val_precision: 0.8105 - val_recall: 0.7628
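Training stopped after 50 of the planned 100 epochs. The best val_binary_accuracy (0.8943) was reached in epoch 40. With patience=10, none of the next ten epochs beat it, so EarlyStopping ended the run. The checkpoint written at that best epoch is presumably what keras.models.load_model restores.

The sketch below replays that rule on the logged values. It is a simplified re-implementation, not the Keras source. It assumes maximization, min_delta=0 and a strict "greater than" test for improvement. It also works from the four-decimal values printed in the log, so apparent ties (epochs 6 and 7 both show 0.8711) may not be exact ties internally.

```python
# val_binary_accuracy per epoch, copied from the log above (rounded to 4 decimals)
val_acc = [
    0.8338, 0.8505, 0.8459, 0.8625, 0.8656, 0.8711, 0.8711, 0.8784, 0.8847, 0.8789,
    0.8627, 0.8844, 0.8804, 0.8847, 0.8842, 0.8824, 0.8883, 0.8793, 0.8720, 0.8837,
    0.8824, 0.8868, 0.8897, 0.8846, 0.8908, 0.8806, 0.8891, 0.8854, 0.8846, 0.8941,
    0.8893, 0.8898, 0.8887, 0.8906, 0.8885, 0.8876, 0.8942, 0.8931, 0.8907, 0.8943,
    0.8888, 0.8894, 0.8898, 0.8875, 0.8907, 0.8861, 0.8889, 0.8924, 0.8899, 0.8938,
]


def early_stopping(values, patience):
    """Simplified mimic of early stopping on a metric to maximize.

    Returns (best_epoch, stop_epoch), both 1-based.
    """
    best, best_epoch, wait = float('-inf'), 0, 0
    for epoch, value in enumerate(values, start=1):
        if value > best:  # strict improvement resets the counter
            best, best_epoch, wait = value, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(values)


print(early_stopping(val_acc, patience=10))  # (40, 50)
```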

The training progress looked roughly like this:

In [44]:

sns.relplot(data=pd.DataFrame(history.history), kind='line', height=4, aspect=4)
plt.show()

To close this section, I can evaluate the model on the X and Y test sets:

In [45]:

Z_pred = np.around(model04.predict([X_test, Y_test], verbose=0))
metrics = pd.concat([metrics.drop(model04.name, level=0, errors='ignore'), model_quality_reporting(Z_test, Z_pred, model_name=model04.name)])

              precision    recall  f1-score   support

        NORM       0.83      0.92      0.87       964
          MI       0.89      0.66      0.76       553
        STTC       0.79      0.71      0.74       523
          CD       0.76      0.72      0.74       498
         HYP       0.73      0.45      0.56       263

   micro avg       0.81      0.75      0.78      2801
   macro avg       0.80      0.69      0.74      2801
weighted avg       0.81      0.75      0.77      2801
 samples avg       0.79      0.77      0.76      2801
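The layout matches scikit-learn's classification_report, which model_quality_reporting presumably calls. The averaged rows summarize the per-class rows in different ways:

- The macro average is the plain mean over the five classes, so the weak HYP recall (0.45) pulls macro recall down to 0.69.
- The weighted average weights each class by its support.
- For recall, the micro average (total true positives over total positives) is mathematically the same as the support-weighted average, which is why both rows show 0.75.
- The samples average computes the metrics per record and then averages them.

The sketch recomputes these figures from the rounded per-class values, so it only agrees to two decimals.

```python
# Per-class (precision, recall, support) copied from the report above (rounded values)
report = {
    'NORM': (0.83, 0.92, 964),
    'MI':   (0.89, 0.66, 553),
    'STTC': (0.79, 0.71, 523),
    'CD':   (0.76, 0.72, 498),
    'HYP':  (0.73, 0.45, 263),
}

support_total = sum(s for _, _, s in report.values())

# Macro: unweighted mean over classes
macro_precision = sum(p for p, _, _ in report.values()) / len(report)
macro_recall = sum(r for _, r, _ in report.values()) / len(report)

# Weighted: each class weighted by its support (equals micro recall)
weighted_recall = sum(r * s for _, r, s in report.values()) / support_total

print(support_total, round(macro_precision, 2), round(macro_recall, 2), round(weighted_recall, 2))
# 2801 0.8 0.69 0.75
```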

I will leave the comparison of this model's results with the other models for the conclusion of this article.
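One detail of the evaluation cell is worth spelling out. np.around turns the sigmoid outputs into 0/1 labels, which amounts to a fixed 0.5 threshold for every class. NumPy rounds exact halves to the nearest even number, so a probability of exactly 0.5 becomes 0 and the rule is effectively `p > 0.5`.

The probabilities below are made up. binarize is a hypothetical helper showing how an explicit per-class threshold could be written instead. Any such thresholds would have to be tuned on the validation set, not on the test set.

```python
import numpy as np

# Made-up sigmoid outputs for two records and five classes
probs = np.array([[0.20, 0.50, 0.51, 0.95, 0.49],
                  [0.70, 0.05, 0.50, 0.30, 0.99]])

rounded = np.around(probs)                   # what the evaluation cell does
thresholded = (probs > 0.5).astype(float)    # explicit strict threshold at 0.5

# np.around rounds halves to even (0.5 -> 0.0), so both rules agree on [0, 1]
assert np.array_equal(rounded, thresholded)


def binarize(p, thresholds=0.5):
    """Hypothetical helper: one threshold per class, broadcast over the last axis."""
    return (p > np.asarray(thresholds)).astype(int)


# e.g. a lower (hypothetical) threshold for the last class only
print(binarize(probs, [0.5, 0.5, 0.5, 0.5, 0.3]))
```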

Combined model for metadata X, ECG waveforms Y and spectrograms S

In this experiment, I combine all three input-data models into one.

In [46]:

def create_model05(X_shape, Y_shape, S_shape, Z_shape):
    # Three inputs: metadata X, ECG waveforms Y and per-lead spectrograms S
    X_inputs = keras.Input(X_shape[1:], name='X_inputs')
    Y_inputs = keras.Input(Y_shape[1:], name='Y_inputs')
    S_inputs = keras.Input(S_shape[1:], name='S_inputs')
    # Feature extractors built with the same helpers as the single-input models
    X = create_X_model(X_inputs)
    Y = create_Y_model(Y_inputs, filters=(64, 128, 256), kernel_size=(7, 3, 3))
    S = create_S_model(S_inputs, filters=(32, 64, 128), kernel_size=((3, 3), (3, 3), (3, 3)))
    # Join all three feature vectors and classify with the same dense head as model04
    X = keras.layers.Concatenate(name='Z_concat')([X, Y, S])
    X = keras.layers.Dense(128, activation='relu', name='Z_dense_1')(X)
    X = keras.layers.Dense(128, activation='relu', name='Z_dense_2')(X)
    X = keras.layers.Dropout(0.5, name='Z_drop_1')(X)
    # One sigmoid unit per diagnostic class (multi-label output)
    outputs = keras.layers.Dense(Z_shape[-1], activation='sigmoid', name='Z_outputs')(X)
    model = keras.Model(inputs=[X_inputs, Y_inputs, S_inputs], outputs=outputs, name='X_Y_S_model')
    return model
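The model summary for this model shows how the spectrogram branch handles the 12 leads:

1. The input is split into one (31, 34, 1) spectrogram per lead.
2. Each spectrogram goes through three convolution and pooling blocks.
3. The per-lead results are concatenated.

The NumPy sketch reproduces only the shape bookkeeping. It assumes "same"-padded convolutions and 2×2 max pooling with the default "valid" padding, as the shapes in the summary suggest. create_S_model is not shown in this part, so this is not its implementation.

```python
import numpy as np

# Dummy spectrogram batch with the S_inputs shape from the summary: (batch, 12, 31, 34, 1)
s = np.zeros((2, 12, 31, 34, 1))

# Split the 12 leads along axis 1 and drop that axis (the tf.split + squeeze rows)
leads = [np.squeeze(part, axis=1) for part in np.split(s, 12, axis=1)]
print(len(leads), leads[0].shape)  # 12 (2, 31, 34, 1)


def pooled(size, times=3, pool=2):
    # 'same' convolutions keep the size; each 2x2 'valid' max pooling floors it by half
    for _ in range(times):
        size = size // pool
    return size


print(pooled(31), pooled(34))  # 3 4

# Global average pooling leaves 128 features per lead (the last filter count)
s_features = 12 * 128
z_width = 32 + 256 + s_features  # X branch + Y branch + flattened S branch
print(s_features, z_width)  # 1536 1824
```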

Now I compile the model and then train it, just as I have done with all the models so far:

In [47]:

model05 = create_model05(X_train.shape, Y_train.shape, S_train.shape, Z_train.shape)
model05.compile(optimizer='adam', loss='binary_crossentropy', metrics=['binary_accuracy', 'Precision', 'Recall'])
model05.summary()

Model: "X_Y_S_model"
__________________________________________________________________________________________________
Layer (type)                          Output Shape             Param #  Connected to
==================================================================================================
S_inputs (InputLayer)                 [(None, 12, 31, 34, 1)]  0        []
S_norm (Normalization)                (None, 12, 31, 34, 1)    3        ['S_inputs[0][0]']
tf.split_1 (TFOpLambda)               [(None, 1, 31, 34, 1),   0        ['S_norm[0][0]']
                                       (None, 1, 31, 34, 1),
                                       (None, 1, 31, 34, 1),
                                       (None, 1, 31, 34, 1),
                                       (None, 1, 31, 34, 1),
                                       (None, 1, 31, 34, 1),
                                       (None, 1, 31, 34, 1),
                                       (None, 1, 31, 34, 1),
                                       (None, 1, 31, 34, 1),
                                       (None, 1, 31, 34, 1),
                                       (None, 1, 31, 34, 1),
                                       (None, 1, 31, 34, 1)]
tf.compat.v1.squeeze_12 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][0]']
tf.compat.v1.squeeze_13 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][1]']
tf.compat.v1.squeeze_14 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][2]']
tf.compat.v1.squeeze_15 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][3]']
tf.compat.v1.squeeze_16 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][4]']
tf.compat.v1.squeeze_17 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][5]']
tf.compat.v1.squeeze_18 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][6]']
tf.compat.v1.squeeze_19 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][7]']
tf.compat.v1.squeeze_20 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][8]']
tf.compat.v1.squeeze_21 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][9]']
tf.compat.v1.squeeze_22 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][10]']
tf.compat.v1.squeeze_23 (TFOpLambda)  (None, 31, 34, 1)        0        ['tf.split_1[0][11]']
S_conv_0_1 (Conv2D)                   (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_12[0][0]']
S_conv_1_1 (Conv2D)                   (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_13[0][0]']
S_conv_2_1 (Conv2D)                   (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_14[0][0]']
S_conv_3_1 (Conv2D)                   (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_15[0][0]']
S_conv_4_1 (Conv2D)                   (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_16[0][0]']
S_conv_5_1 (Conv2D)                   (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_17[0][0]']
S_conv_6_1 (Conv2D)                   (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_18[0][0]']
S_conv_7_1 (Conv2D)                   (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_19[0][0]']
S_conv_8_1 (Conv2D)                   (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_20[0][0]']
S_conv_9_1 (Conv2D)                   (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_21[0][0]']
S_conv_10_1 (Conv2D)                  (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_22[0][0]']
S_conv_11_1 (Conv2D)                  (None, 31, 34, 32)       320      ['tf.compat.v1.squeeze_23[0][0]']
Y_inputs (InputLayer)                 [(None, 1000, 12)]       0        []
S_norm_0_1 (BatchNormalization)       (None, 31, 34, 32)       128      ['S_conv_0_1[0][0]']
S_norm_1_1 (BatchNormalization)       (None, 31, 34, 32)       128      ['S_conv_1_1[0][0]']
S_norm_2_1 (BatchNormalization)       (None, 31, 34, 32)       128      ['S_conv_2_1[0][0]']
S_norm_3_1 (BatchNormalization)       (None, 31, 34, 32)       128      ['S_conv_3_1[0][0]']
S_norm_4_1 (BatchNormalization)       (None, 31, 34, 32)       128      ['S_conv_4_1[0][0]']
S_norm_5_1 (BatchNormalization)       (None, 31, 34, 32)       128      ['S_conv_5_1[0][0]']
S_norm_6_1 (BatchNormalization)       (None, 31, 34, 32)       128      ['S_conv_6_1[0][0]']
S_norm_7_1 (BatchNormalization)       (None, 31, 34, 32)       128      ['S_conv_7_1[0][0]']
S_norm_8_1 (BatchNormalization)       (None, 31, 34, 32)       128      ['S_conv_8_1[0][0]']
S_norm_9_1 (BatchNormalization)       (None, 31, 34, 32)       128      ['S_conv_9_1[0][0]']
S_norm_10_1 (BatchNormalization)      (None, 31, 34, 32)       128      ['S_conv_10_1[0][0]']
S_norm_11_1 (BatchNormalization)      (None, 31, 34, 32)       128      ['S_conv_11_1[0][0]']
Y_norm (Normalization)                (None, 1000, 12)         25       ['Y_inputs[0][0]']
S_relu_0_1 (ReLU)                     (None, 31, 34, 32)       0        ['S_norm_0_1[0][0]']
S_relu_1_1 (ReLU)                     (None, 31, 34, 32)       0        ['S_norm_1_1[0][0]']
S_relu_2_1 (ReLU)                     (None, 31, 34, 32)       0        ['S_norm_2_1[0][0]']
S_relu_3_1 (ReLU)                     (None, 31, 34, 32)       0        ['S_norm_3_1[0][0]']
S_relu_4_1 (ReLU)                     (None, 31, 34, 32)       0        ['S_norm_4_1[0][0]']
S_relu_5_1 (ReLU)                     (None, 31, 34, 32)       0        ['S_norm_5_1[0][0]']
S_relu_6_1 (ReLU)                     (None, 31, 34, 32)       0        ['S_norm_6_1[0][0]']
S_relu_7_1 (ReLU)                     (None, 31, 34, 32)       0        ['S_norm_7_1[0][0]']
S_relu_8_1 (ReLU)                     (None, 31, 34, 32)       0        ['S_norm_8_1[0][0]']
S_relu_9_1 (ReLU)                     (None, 31, 34, 32)       0        ['S_norm_9_1[0][0]']
S_relu_10_1 (ReLU)                    (None, 31, 34, 32)       0        ['S_norm_10_1[0][0]']
S_relu_11_1 (ReLU)                    (None, 31, 34, 32)       0        ['S_norm_11_1[0][0]']
Y_conv_1 (Conv1D)                     (None, 1000, 64)         5440     ['Y_norm[0][0]']
S_pool_0_1 (MaxPooling2D)             (None, 15, 17, 32)       0        ['S_relu_0_1[0][0]']
S_pool_1_1 (MaxPooling2D)             (None, 15, 17, 32)       0        ['S_relu_1_1[0][0]']
S_pool_2_1 (MaxPooling2D)             (None, 15, 17, 32)       0        ['S_relu_2_1[0][0]']
S_pool_3_1 (MaxPooling2D)             (None, 15, 17, 32)       0        ['S_relu_3_1[0][0]']
S_pool_4_1 (MaxPooling2D)             (None, 15, 17, 32)       0        ['S_relu_4_1[0][0]']
S_pool_5_1 (MaxPooling2D)             (None, 15, 17, 32)       0        ['S_relu_5_1[0][0]']
S_pool_6_1 (MaxPooling2D)             (None, 15, 17, 32)       0        ['S_relu_6_1[0][0]']
S_pool_7_1 (MaxPooling2D)             (None, 15, 17, 32)       0        ['S_relu_7_1[0][0]']
S_pool_8_1 (MaxPooling2D)             (None, 15, 17, 32)       0        ['S_relu_8_1[0][0]']
S_pool_9_1 (MaxPooling2D)             (None, 15, 17, 32)       0        ['S_relu_9_1[0][0]']
S_pool_10_1 (MaxPooling2D)            (None, 15, 17, 32)       0        ['S_relu_10_1[0][0]']
S_pool_11_1 (MaxPooling2D)            (None, 15, 17, 32)       0        ['S_relu_11_1[0][0]']
Y_norm_1 (BatchNormalization)         (None, 1000, 64)         256      ['Y_conv_1[0][0]']
S_conv_0_2 (Conv2D)                   (None, 15, 17, 64)       18496    ['S_pool_0_1[0][0]']
S_conv_1_2 (Conv2D)                   (None, 15, 17, 64)       18496    ['S_pool_1_1[0][0]']
S_conv_2_2 (Conv2D)                   (None, 15, 17, 64)       18496    ['S_pool_2_1[0][0]']
S_conv_3_2 (Conv2D)                   (None, 15, 17, 64)       18496    ['S_pool_3_1[0][0]']
S_conv_4_2 (Conv2D)                   (None, 15, 17, 64)       18496    ['S_pool_4_1[0][0]']
S_conv_5_2 (Conv2D)                   (None, 15, 17, 64)       18496    ['S_pool_5_1[0][0]']
S_conv_6_2 (Conv2D)                   (None, 15, 17, 64)       18496    ['S_pool_6_1[0][0]']
S_conv_7_2 (Conv2D)                   (None, 15, 17, 64)       18496    ['S_pool_7_1[0][0]']
S_conv_8_2 (Conv2D)                   (None, 15, 17, 64)       18496    ['S_pool_8_1[0][0]']
S_conv_9_2 (Conv2D)                   (None, 15, 17, 64)       18496    ['S_pool_9_1[0][0]']
S_conv_10_2 (Conv2D)                  (None, 15, 17, 64)       18496    ['S_pool_10_1[0][0]']
S_conv_11_2 (Conv2D)                  (None, 15, 17, 64)       18496    ['S_pool_11_1[0][0]']
Y_relu_1 (ReLU)                       (None, 1000, 64)         0        ['Y_norm_1[0][0]']
S_norm_0_2 (BatchNormalization)       (None, 15, 17, 64)       256      ['S_conv_0_2[0][0]']
S_norm_1_2 (BatchNormalization)       (None, 15, 17, 64)       256      ['S_conv_1_2[0][0]']
S_norm_2_2 (BatchNormalization)       (None, 15, 17, 64)       256      ['S_conv_2_2[0][0]']
S_norm_3_2 (BatchNormalization)       (None, 15, 17, 64)       256      ['S_conv_3_2[0][0]']
S_norm_4_2 (BatchNormalization)       (None, 15, 17, 64)       256      ['S_conv_4_2[0][0]']
S_norm_5_2 (BatchNormalization)       (None, 15, 17, 64)       256      ['S_conv_5_2[0][0]']
S_norm_6_2 (BatchNormalization)       (None, 15, 17, 64)       256      ['S_conv_6_2[0][0]']
S_norm_7_2 (BatchNormalization)       (None, 15, 17, 64)       256      ['S_conv_7_2[0][0]']
S_norm_8_2 (BatchNormalization)       (None, 15, 17, 64)       256      ['S_conv_8_2[0][0]']
S_norm_9_2 (BatchNormalization)       (None, 15, 17, 64)       256      ['S_conv_9_2[0][0]']
S_norm_10_2 (BatchNormalization)      (None, 15, 17, 64)       256      ['S_conv_10_2[0][0]']
S_norm_11_2 (BatchNormalization)      (None, 15, 17, 64)       256      ['S_conv_11_2[0][0]']
Y_pool_1 (MaxPooling1D)               (None, 500, 64)          0        ['Y_relu_1[0][0]']
S_relu_0_2 (ReLU)                     (None, 15, 17, 64)       0        ['S_norm_0_2[0][0]']
S_relu_1_2 (ReLU)                     (None, 15, 17, 64)       0        ['S_norm_1_2[0][0]']
S_relu_2_2 (ReLU)                     (None, 15, 17, 64)       0        ['S_norm_2_2[0][0]']
S_relu_3_2 (ReLU)                     (None, 15, 17, 64)       0        ['S_norm_3_2[0][0]']
S_relu_4_2 (ReLU)                     (None, 15, 17, 64)       0        ['S_norm_4_2[0][0]']
S_relu_5_2 (ReLU)                     (None, 15, 17, 64)       0        ['S_norm_5_2[0][0]']
S_relu_6_2 (ReLU)                     (None, 15, 17, 64)       0        ['S_norm_6_2[0][0]']
S_relu_7_2 (ReLU)                     (None, 15, 17, 64)       0        ['S_norm_7_2[0][0]']
S_relu_8_2 (ReLU)                     (None, 15, 17, 64)       0        ['S_norm_8_2[0][0]']
S_relu_9_2 (ReLU)                     (None, 15, 17, 64)       0        ['S_norm_9_2[0][0]']
S_relu_10_2 (ReLU)                    (None, 15, 17, 64)       0        ['S_norm_10_2[0][0]']
S_relu_11_2 (ReLU)                    (None, 15, 17, 64)       0        ['S_norm_11_2[0][0]']
Y_conv_2 (Conv1D)                     (None, 500, 128)         24704    ['Y_pool_1[0][0]']
S_pool_0_2 (MaxPooling2D)             (None, 7, 8, 64)         0        ['S_relu_0_2[0][0]']
S_pool_1_2 (MaxPooling2D)             (None, 7, 8, 64)         0        ['S_relu_1_2[0][0]']
S_pool_2_2 (MaxPooling2D)             (None, 7, 8, 64)         0        ['S_relu_2_2[0][0]']
S_pool_3_2 (MaxPooling2D)             (None, 7, 8, 64)         0        ['S_relu_3_2[0][0]']
S_pool_4_2 (MaxPooling2D)             (None, 7, 8, 64)         0        ['S_relu_4_2[0][0]']
S_pool_5_2 (MaxPooling2D)             (None, 7, 8, 64)         0        ['S_relu_5_2[0][0]']
S_pool_6_2 (MaxPooling2D)             (None, 7, 8, 64)         0        ['S_relu_6_2[0][0]']
S_pool_7_2 (MaxPooling2D)             (None, 7, 8, 64)         0        ['S_relu_7_2[0][0]']
S_pool_8_2 (MaxPooling2D)             (None, 7, 8, 64)         0        ['S_relu_8_2[0][0]']
S_pool_9_2 (MaxPooling2D)             (None, 7, 8, 64)         0        ['S_relu_9_2[0][0]']
S_pool_10_2 (MaxPooling2D)            (None, 7, 8, 64)         0        ['S_relu_10_2[0][0]']
S_pool_11_2 (MaxPooling2D)            (None, 7, 8, 64)         0        ['S_relu_11_2[0][0]']
Y_norm_2 (BatchNormalization)         (None, 500, 128)         512      ['Y_conv_2[0][0]']
S_conv_0_3 (Conv2D)                   (None, 7, 8, 128)        73856    ['S_pool_0_2[0][0]']
S_conv_1_3 (Conv2D)                   (None, 7, 8, 128)        73856    ['S_pool_1_2[0][0]']
S_conv_2_3 (Conv2D)                   (None, 7, 8, 128)        73856    ['S_pool_2_2[0][0]']
S_conv_3_3 (Conv2D)                   (None, 7, 8, 128)        73856    ['S_pool_3_2[0][0]']
S_conv_4_3 (Conv2D)                   (None, 7, 8, 128)        73856    ['S_pool_4_2[0][0]']
S_conv_5_3 (Conv2D)                   (None, 7, 8, 128)        73856    ['S_pool_5_2[0][0]']
S_conv_6_3 (Conv2D)                   (None, 7, 8, 128)        73856    ['S_pool_6_2[0][0]']
S_conv_7_3 (Conv2D)                   (None, 7, 8, 128)        73856    ['S_pool_7_2[0][0]']
S_conv_8_3 (Conv2D)                   (None, 7, 8, 128)        73856    ['S_pool_8_2[0][0]']
S_conv_9_3 (Conv2D)                   (None, 7, 8, 128)        73856    ['S_pool_9_2[0][0]']
S_conv_10_3 (Conv2D)                  (None, 7, 8, 128)        73856    ['S_pool_10_2[0][0]']
S_conv_11_3 (Conv2D)                  (None, 7, 8, 128)        73856    ['S_pool_11_2[0][0]']
Y_relu_2 (ReLU)                       (None, 500, 128)         0        ['Y_norm_2[0][0]']
S_norm_0_3 (BatchNormalization)       (None, 7, 8, 128)        512      ['S_conv_0_3[0][0]']
S_norm_1_3 (BatchNormalization)       (None, 7, 8, 128)        512      ['S_conv_1_3[0][0]']
S_norm_2_3 (BatchNormalization)       (None, 7, 8, 128)        512      ['S_conv_2_3[0][0]']
S_norm_3_3 (BatchNormalization)       (None, 7, 8, 128)        512      ['S_conv_3_3[0][0]']
S_norm_4_3 (BatchNormalization)       (None, 7, 8, 128)        512      ['S_conv_4_3[0][0]']
S_norm_5_3 (BatchNormalization)       (None, 7, 8, 128)        512      ['S_conv_5_3[0][0]']
S_norm_6_3 (BatchNormalization)       (None, 7, 8, 128)        512      ['S_conv_6_3[0][0]']
S_norm_7_3 (BatchNormalization)       (None, 7, 8, 128)        512      ['S_conv_7_3[0][0]']
S_norm_8_3 (BatchNormalization)       (None, 7, 8, 128)        512      ['S_conv_8_3[0][0]']
S_norm_9_3 (BatchNormalization)       (None, 7, 8, 128)        512      ['S_conv_9_3[0][0]']
S_norm_10_3 (BatchNormalization)      (None, 7, 8, 128)        512      ['S_conv_10_3[0][0]']
S_norm_11_3 (BatchNormalization)      (None, 7, 8, 128)        512      ['S_conv_11_3[0][0]']
X_inputs (InputLayer)                 [(None, 7)]              0        []
Y_pool_2 (MaxPooling1D)               (None, 250, 128)         0        ['Y_relu_2[0][0]']
S_relu_0_3 (ReLU)                     (None, 7, 8, 128)        0        ['S_norm_0_3[0][0]']
S_relu_1_3 (ReLU)                     (None, 7, 8, 128)        0        ['S_norm_1_3[0][0]']
S_relu_2_3 (ReLU)                     (None, 7, 8, 128)        0        ['S_norm_2_3[0][0]']
S_relu_3_3 (ReLU)                     (None, 7, 8, 128)        0        ['S_norm_3_3[0][0]']
S_relu_4_3 (ReLU)                     (None, 7, 8, 128)        0        ['S_norm_4_3[0][0]']
S_relu_5_3 (ReLU)                     (None, 7, 8, 128)        0        ['S_norm_5_3[0][0]']
S_relu_6_3 (ReLU)                     (None, 7, 8, 128)        0        ['S_norm_6_3[0][0]']
S_relu_7_3 (ReLU)                     (None, 7, 8, 128)        0        ['S_norm_7_3[0][0]']
S_relu_8_3 (ReLU)                     (None, 7, 8, 128)        0        ['S_norm_8_3[0][0]']
S_relu_9_3 (ReLU)                     (None, 7, 8, 128)        0        ['S_norm_9_3[0][0]']
S_relu_10_3 (ReLU)                    (None, 7, 8, 128)        0        ['S_norm_10_3[0][0]']
S_relu_11_3 (ReLU)                    (None, 7, 8, 128)        0        ['S_norm_11_3[0][0]']
X_norm (Normalization)                (None, 7)                15       ['X_inputs[0][0]']
Y_conv_3 (Conv1D)                     (None, 250, 256)         98560    ['Y_pool_2[0][0]']
S_pool_0_3 (MaxPooling2D)             (None, 3, 4, 128)        0        ['S_relu_0_3[0][0]']
S_pool_1_3 (MaxPooling2D)             (None, 3, 4, 128)        0        ['S_relu_1_3[0][0]']
S_pool_2_3 (MaxPooling2D)             (None, 3, 4, 128)        0        ['S_relu_2_3[0][0]']
S_pool_3_3 (MaxPooling2D)             (None, 3, 4, 128)        0        ['S_relu_3_3[0][0]']
S_pool_4_3 (MaxPooling2D)             (None, 3, 4, 128)        0        ['S_relu_4_3[0][0]']
S_pool_5_3 (MaxPooling2D)             (None, 3, 4, 128)        0        ['S_relu_5_3[0][0]']
S_pool_6_3 (MaxPooling2D)             (None, 3, 4, 128)        0        ['S_relu_6_3[0][0]']
S_pool_7_3 (MaxPooling2D)             (None, 3, 4, 128)        0        ['S_relu_7_3[0][0]']
S_pool_8_3 (MaxPooling2D)             (None, 3, 4, 128)        0        ['S_relu_8_3[0][0]']
S_pool_9_3 (MaxPooling2D)             (None, 3, 4, 128)        0        ['S_relu_9_3[0][0]']
S_pool_10_3 (MaxPooling2D)            (None, 3, 4, 128)        0        ['S_relu_10_3[0][0]']
S_pool_11_3 (MaxPooling2D)            (None, 3, 4, 128)        0        ['S_relu_11_3[0][0]']
X_dense_1 (Dense)                     (None, 32)               256      ['X_norm[0][0]']
Y_norm_3 (BatchNormalization)         (None, 250, 256)         1024     ['Y_conv_3[0][0]']
S_aver_0 (GlobalAveragePooling2D)     (None, 128)              0        ['S_pool_0_3[0][0]']
S_aver_1 (GlobalAveragePooling2D)     (None, 128)              0        ['S_pool_1_3[0][0]']
S_aver_2 (GlobalAveragePooling2D)     (None, 128)              0        ['S_pool_2_3[0][0]']
S_aver_3 (GlobalAveragePooling2D)     (None, 128)              0        ['S_pool_3_3[0][0]']
S_aver_4 (GlobalAveragePooling2D)     (None, 128)              0        ['S_pool_4_3[0][0]']
S_aver_5 (GlobalAveragePooling2D)     (None, 128)              0        ['S_pool_5_3[0][0]']
S_aver_6 (GlobalAveragePooling2D)     (None, 128)              0        ['S_pool_6_3[0][0]']
S_aver_7 (GlobalAveragePooling2D)     (None, 128)              0        ['S_pool_7_3[0][0]']
S_aver_8 (GlobalAveragePooling2D)     (None, 128)              0        ['S_pool_8_3[0][0]']
S_aver_9 (GlobalAveragePooling2D)     (None, 128)              0        ['S_pool_9_3[0][0]']
S_aver_10 (GlobalAveragePooling2D)    (None, 128)              0        ['S_pool_10_3[0][0]']
S_aver_11 (GlobalAveragePooling2D)    (None, 128)              0        ['S_pool_11_3[0][0]']
X_drop_1 (Dropout)                    (None, 32)               0        ['X_dense_1[0][0]']
Y_relu_3 (ReLU)                       (None, 250, 256)         0        ['Y_norm_3[0][0]']
tf.expand_dims_12 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_0[0][0]']
tf.expand_dims_13 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_1[0][0]']
tf.expand_dims_14 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_2[0][0]']
tf.expand_dims_15 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_3[0][0]']
tf.expand_dims_16 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_4[0][0]']
tf.expand_dims_17 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_5[0][0]']
tf.expand_dims_18 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_6[0][0]']
tf.expand_dims_19 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_7[0][0]']
tf.expand_dims_20 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_8[0][0]']
tf.expand_dims_21 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_9[0][0]']
tf.expand_dims_22 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_10[0][0]']
tf.expand_dims_23 (TFOpLambda)        (None, 1, 128)           0        ['S_aver_11[0][0]']
X_dense_2 (Dense)                     (None, 32)               1056     ['X_drop_1[0][0]']
Y_aver (GlobalAveragePooling1D)       (None, 256)              0        ['Y_relu_3[0][0]']
S_concat (Concatenate)                (None, 12, 128)          0        ['tf.expand_dims_12[0][0]',
                                                                         'tf.expand_dims_13[0][0]',
                                                                         'tf.expand_dims_14[0][0]',
                                                                         'tf.expand_dims_15[0][0]',
                                                                         'tf.expand_dims_16[0][0]',
                                                                         'tf.expand_dims_17[0][0]',
                                                                         'tf.expand_dims_18[0][0]',
                                                                         'tf.expand_dims_19[0][0]',
                                                                         'tf.expand_dims_20[0][0]',
                                                                         'tf.expand_dims_21[0][0]',
                                                                         'tf.expand_dims_22[0][0]',
                                                                         'tf.expand_dims_23[0][0]']
X_drop_2 (Dropout)                    (None, 32)               0        ['X_dense_2[0][0]']
Y_drop (Dropout)                      (None, 256)              0        ['Y_aver[0][0]']
S_flatten (Flatten)                   (None, 1536)             0        ['S_concat[0][0]']
Z_concat (Concatenate)                (None, 1824)             0        ['X_drop_2[0][0]', 'Y_drop[0][0]', 'S_flatten[0][0]']
Z_dense_1 (Dense)                     (None, 128)              233600   ['Z_concat[0][0]']
Z_dense_2 (Dense)                     (None, 128)              16512    ['Z_dense_1[0][0]']
Z_drop_1 (Dropout)                    (None, 128)              0        ['Z_dense_2[0][0]']
Z_outputs (Dense)                     (None, 5)                645      ['Z_drop_1[0][0]']
==================================================================================================
Total params: 1505424 (5.74 MB)
Trainable params: 1499109 (5.72 MB)
Non-trainable params: 6315 (24.68 KB)
__________________________________________________________________________________________________
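Compared with the X + Y model (185,997 parameters), this model has 1,505,424. A hand count shows where the growth comes from:

- Each of the 12 per-lead spectrogram branches has 93,568 parameters.
- Z_dense_1 grows from 36,992 to 233,600 because its input widens from 288 to 1,824 features.

The sketch uses the standard Keras parameter formulas:

- Dense: n_in·n_out + n_out
- Conv: kernel weights · c_in · filters + filters
- BatchNormalization: 4 per channel
- Adapted Normalization: 2 per feature + 1

The layer sizes are read off the summary, and the helper functions are hypothetical.

```python
def dense_params(n_in, n_out):
    return n_in * n_out + n_out


def conv1d_params(kernel, c_in, filters):
    return kernel * c_in * filters + filters


def conv2d_params(kernel_h, kernel_w, c_in, filters):
    return kernel_h * kernel_w * c_in * filters + filters


def batchnorm_params(channels):
    return 4 * channels  # gamma, beta, moving mean, moving variance


def normalization_params(features):
    return 2 * features + 1  # mean and variance per feature + count


# One spectrogram branch per lead: 3x3 convolutions with 32, 64, 128 filters
per_lead = (conv2d_params(3, 3, 1, 32) + conv2d_params(3, 3, 32, 64) + conv2d_params(3, 3, 64, 128)
            + batchnorm_params(32) + batchnorm_params(64) + batchnorm_params(128))
s_branch = normalization_params(1) + 12 * per_lead

y_branch = (normalization_params(12)
            + conv1d_params(7, 12, 64) + conv1d_params(3, 64, 128) + conv1d_params(3, 128, 256)
            + batchnorm_params(64) + batchnorm_params(128) + batchnorm_params(256))
x_branch = normalization_params(7) + dense_params(7, 32) + dense_params(32, 32)

# Dense head on the 1824-wide Z_concat (32 + 256 + 1536)
head = dense_params(1824, 128) + dense_params(128, 128) + dense_params(128, 5)

total = s_branch + y_branch + x_branch + head
print(per_lead, s_branch, head, total)  # 93568 1122819 250757 1505424
```

Roughly three quarters of the parameters (1,122,819) sit in the twelve spectrogram branches. This is consistent with the much longer epoch times in the training log for this model.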

In [48]:

MODEL_CHECKPOINT = '/kaggle/working/model/model05.ckpt'

# stop after 10 epochs without improvement; keep only the best checkpoint
callbacks_list = [
    keras.callbacks.EarlyStopping(monitor='val_binary_accuracy', patience=10),
    keras.callbacks.ModelCheckpoint(filepath=MODEL_CHECKPOINT, monitor='val_binary_accuracy', save_best_only=True)
]

history = model05.fit([X_train, Y_train, S_train], Z_train, epochs=100, batch_size=32, callbacks=callbacks_list,
                      validation_data=([X_valid, Y_valid, S_valid], Z_valid))

# reload the best checkpoint and store it in the native Keras format
model05 = keras.models.load_model(MODEL_CHECKPOINT)
model05.save('/kaggle/working/model/model05.keras')

Epoch 1/100
546/546 [==============================] - 73s 78ms/step - loss: 0.4367 - binary_accuracy: 0.8027 - precision: 0.6567 - recall: 0.4733 - val_loss: 0.3634 - val_binary_accuracy: 0.8370 - val_precision: 0.7266 - val_recall: 0.5812
Epoch 2/100
546/546 [==============================] - 41s 75ms/step - loss: 0.3563 - binary_accuracy: 0.8468 - precision: 0.7428 - recall: 0.6099 - val_loss: 0.3341 - val_binary_accuracy: 0.8513 - val_precision: 0.7411 - val_recall: 0.6432
Epoch 3/100
546/546 [==============================] - 40s 74ms/step - loss: 0.3281 - binary_accuracy: 0.8630 - precision: 0.7700 - recall: 0.6594 - val_loss: 0.3133 - val_binary_accuracy: 0.8662 - val_precision: 0.7847 - val_recall: 0.6568
Epoch 4/100
546/546 [==============================] - 20s 37ms/step - loss: 0.3108 - binary_accuracy: 0.8724 - precision: 0.7913 - recall: 0.6782 - val_loss: 0.3220 - val_binary_accuracy: 0.8632 - val_precision: 0.7745 - val_recall: 0.6557
Epoch 5/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2937 - binary_accuracy: 0.8803 - precision: 0.8030 - recall: 0.7025 - val_loss: 0.3798 - val_binary_accuracy: 0.8473 - val_precision: 0.7033 - val_recall: 0.6968
Epoch 6/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2888 - binary_accuracy: 0.8830 - precision: 0.8116 - recall: 0.7042 - val_loss: 0.3121 - val_binary_accuracy: 0.8658 - val_precision: 0.7907 - val_recall: 0.6457
Epoch 7/100
546/546 [==============================] - 41s 76ms/step - loss: 0.2806 - binary_accuracy: 0.8865 - precision: 0.8162 - recall: 0.7160 - val_loss: 0.2973 - val_binary_accuracy: 0.8792 - val_precision: 0.8164 - val_recall: 0.6803
Epoch 8/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2742 - binary_accuracy: 0.8895 - precision: 0.8209 - recall: 0.7246 - val_loss: 0.3024 - val_binary_accuracy: 0.8788 - val_precision: 0.7843 - val_recall: 0.7253
Epoch 9/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2694 - binary_accuracy: 0.8917 - precision: 0.8264 - recall: 0.7278 - val_loss: 0.3001 - val_binary_accuracy: 0.8779 - val_precision: 0.7831 - val_recall: 0.7224
Epoch 10/100
546/546 [==============================] - 20s 36ms/step - loss: 0.2631 - binary_accuracy: 0.8948 - precision: 0.8342 - recall: 0.7329 - val_loss: 0.3032 - val_binary_accuracy: 0.8782 - val_precision: 0.7867 - val_recall: 0.7185
Epoch 11/100
546/546 [==============================] - 20s 38ms/step - loss: 0.2601 - binary_accuracy: 0.8970 - precision: 0.8354 - recall: 0.7421 - val_loss: 0.2918 - val_binary_accuracy: 0.8787 - val_precision: 0.8005 - val_recall: 0.7000
Epoch 12/100
546/546 [==============================] - 21s 38ms/step - loss: 0.2547 - binary_accuracy: 0.8981 - precision: 0.8377 - recall: 0.7446 - val_loss: 0.3232 - val_binary_accuracy: 0.8709 - val_precision: 0.8395 - val_recall: 0.6118
Epoch 13/100
546/546 [==============================] - 40s 74ms/step - loss: 0.2512 - binary_accuracy: 0.8993 - precision: 0.8397 - recall: 0.7475 - val_loss: 0.2859 - val_binary_accuracy: 0.8839 - val_precision: 0.8128 - val_recall: 0.7092
Epoch 14/100
546/546 [==============================] - 41s 76ms/step - loss: 0.2454 - binary_accuracy: 0.9016 - precision: 0.8437 - recall: 0.7533 - val_loss: 0.2838 - val_binary_accuracy: 0.8839 - val_precision: 0.8075 - val_recall: 0.7167
Epoch 15/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2376 - binary_accuracy: 0.9052 - precision: 0.8501 - recall: 0.7623 - val_loss: 0.2959 - val_binary_accuracy: 0.8769 - val_precision: 0.7828 - val_recall: 0.7174
Epoch 16/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2359 - binary_accuracy: 0.9067 - precision: 0.8521 - recall: 0.7668 - val_loss: 0.2951 - val_binary_accuracy: 0.8798 - val_precision: 0.8153 - val_recall: 0.6850
Epoch 17/100
546/546 [==============================] - 40s 74ms/step - loss: 0.2270 - binary_accuracy: 0.9094 - precision: 0.8576 - recall: 0.7726 - val_loss: 0.2973 - val_binary_accuracy: 0.8844 - val_precision: 0.7964 - val_recall: 0.7356
Epoch 18/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2248 - binary_accuracy: 0.9102 - precision: 0.8584 - recall: 0.7756 - val_loss: 0.3116 - val_binary_accuracy: 0.8782 - val_precision: 0.7734 - val_recall: 0.7403
Epoch 19/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2181 - binary_accuracy: 0.9126 - precision: 0.8630 - recall: 0.7809 - val_loss: 0.3419 - val_binary_accuracy: 0.8779 - val_precision: 0.7921 - val_recall: 0.7082
Epoch 20/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2097 - binary_accuracy: 0.9167 - precision: 0.8688 - recall: 0.7927 - val_loss: 0.3222 - val_binary_accuracy: 0.8805 - val_precision: 0.8009 - val_recall: 0.7089
Epoch 21/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2054 - binary_accuracy: 0.9184 - precision: 0.8726 - recall: 0.7960 - val_loss: 0.3747 - val_binary_accuracy: 0.8642 - val_precision: 0.7620 - val_recall: 0.6818
Epoch 22/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2005 - binary_accuracy: 0.9207 - precision: 0.8780 - recall: 0.8002 - val_loss: 0.4820 - val_binary_accuracy: 0.8082 - val_precision: 0.6178 - val_recall: 0.6547
Epoch 23/100
546/546 [==============================] - 20s 37ms/step - loss: 0.2109 - binary_accuracy: 0.9171 - precision: 0.8695 - recall: 0.7939 - val_loss: 0.4633 - val_binary_accuracy: 0.8668 - val_precision: 0.7798 - val_recall: 0.6671
Epoch 24/100
546/546 [==============================] - 20s 37ms/step - loss: 0.1875 - binary_accuracy: 0.9260 - precision: 0.8849 - recall: 0.8158 - val_loss: 0.5360 - val_binary_accuracy: 0.8647 - val_precision: 0.7722 - val_recall: 0.6675
Epoch 25/100
546/546 [==============================] - 20s 37ms/step - loss: 0.1782 - binary_accuracy: 0.9305 - precision: 0.8928 - recall: 0.8265 - val_loss: 0.4001 - val_binary_accuracy: 0.8487 - val_precision: 0.7212 - val_recall: 0.6654
Epoch 26/100
546/546 [==============================] - 20s 37ms/step - loss: 0.1826 - binary_accuracy: 0.9285 - precision: 0.8915 - recall: 0.8191 - val_loss: 0.3431 - val_binary_accuracy: 0.8698 - val_precision: 0.7674 - val_recall: 0.7039
Epoch 27/100
546/546 [==============================] - 20s 37ms/step - loss: 0.1625 - binary_accuracy: 0.9355 - precision: 0.8986 - recall: 0.8419 - val_loss: 0.3582 - val_binary_accuracy: 0.8689 - val_precision: 0.7750 - val_recall: 0.6868
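Why did training stop at epoch 27? With `patience=10`, EarlyStopping ends the run once ten consecutive epochs fail to beat the best monitored value. The sketch below re-implements that rule in simplified form in plain Python. It is not Keras's own code and ignores options such as `min_delta` and `baseline`. It is applied to the `val_binary_accuracy` values copied from the log above, which are rounded as displayed:

```python
def early_stopping(values, patience):
    """Return (best_epoch, stop_epoch) for a metric that should increase."""
    best = float("-inf")
    best_epoch = 0
    wait = 0
    for epoch, value in enumerate(values, start=1):
        if value > best:          # strict improvement resets the counter
            best, best_epoch, wait = value, epoch, 0
        else:
            wait += 1
            if wait >= patience:  # patience exhausted: stop after this epoch
                return best_epoch, epoch
    return best_epoch, len(values)

# val_binary_accuracy for epochs 1-27, as printed in the training log
val_acc = [0.8370, 0.8513, 0.8662, 0.8632, 0.8473, 0.8658, 0.8792, 0.8788,
           0.8779, 0.8782, 0.8787, 0.8709, 0.8839, 0.8839, 0.8769, 0.8798,
           0.8844, 0.8782, 0.8779, 0.8805, 0.8642, 0.8082, 0.8668, 0.8647,
           0.8487, 0.8698, 0.8689]

print(early_stopping(val_acc, patience=10))  # (17, 27)
```

ModelCheckpoint watched the same metric with `save_best_only=True`. The model reloaded from `MODEL_CHECKPOINT` after `fit` should therefore hold the weights from epoch 17, not from the last epoch.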

So this is how the training went:

In [49]:

sns.relplot(data=pd.DataFrame(history.history), kind='line', height=4, aspect=4)

plt.show()

Finally, I can evaluate the model against the test data sets for X, Y and S:

In [50]:

Z_pred = np.around(model05.predict([X_test, Y_test, S_test], verbose=0))

metrics = pd.concat([metrics.drop(model05.name, level=0, errors='ignore'), model_quality_reporting(Z_test, Z_pred, model_name=model05.name)])

              precision    recall  f1-score   support

        NORM       0.82      0.91      0.86       964
          MI       0.93      0.63      0.75       553
        STTC       0.78      0.73      0.75       523
          CD       0.71      0.72      0.72       498
         HYP       0.71      0.49      0.58       263

   micro avg       0.80      0.75      0.77      2801
   macro avg       0.79      0.69      0.73      2801
weighted avg       0.81      0.75      0.77      2801
 samples avg       0.78      0.75      0.75      2801

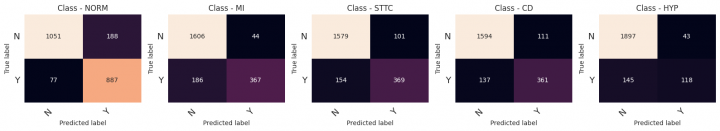
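`np.around` turns each sigmoid output into 0 or 1 independently for every class. NumPy rounds exact halves to the nearest even number, so on outputs in [0, 1] this is the same as the rule `p > 0.5`.

The summary rows of the report differ only in how the per-class counts are combined:

- micro avg pools true and false positives over all classes;
- macro avg is the unweighted mean of the per-class scores;
- weighted avg weights the per-class scores by support.

Below is a plain-Python sketch on made-up toy labels. It is not the author's `model_quality_reporting` helper, whose code isn't shown here, and the names `binarize` and `report` are hypothetical:

```python
def binarize(probs, threshold=0.5):
    """Multi-label decision: each class is switched on independently."""
    return [[1 if p > threshold else 0 for p in row] for row in probs]

def report(y_true, y_pred):
    """Per-class and averaged precision/recall for multi-label 0/1 lists."""
    n = len(y_true[0])
    tp, fp, fn = [0] * n, [0] * n, [0] * n
    for t_row, p_row in zip(y_true, y_pred):
        for c, (t, p) in enumerate(zip(t_row, p_row)):
            tp[c] += t * p          # predicted and true
            fp[c] += (1 - t) * p    # predicted but not true
            fn[c] += t * (1 - p)    # true but missed

    def div(a, b):
        return a / b if b else 0.0

    precision = [div(tp[c], tp[c] + fp[c]) for c in range(n)]
    recall = [div(tp[c], tp[c] + fn[c]) for c in range(n)]
    support = [tp[c] + fn[c] for c in range(n)]
    total = sum(support)
    return {
        "precision": precision,
        "recall": recall,
        "micro_precision": div(sum(tp), sum(tp) + sum(fp)),
        "micro_recall": div(sum(tp), sum(tp) + sum(fn)),
        "macro_precision": sum(precision) / n,
        "macro_recall": sum(recall) / n,
        "weighted_precision": sum(p * s for p, s in zip(precision, support)) / total,
        "weighted_recall": sum(r * s for r, s in zip(recall, support)) / total,
    }

# Toy data: 4 recordings, 2 classes (values are illustrative only).
y_true = [[1, 0], [1, 1], [0, 1], [0, 1]]
probs = [[0.9, 0.2], [0.4, 0.7], [0.1, 0.6], [0.6, 0.5]]
print(report(y_true, binarize(probs)))
```

A side effect of these definitions is that weighted-average recall always equals micro recall. This is why both read 0.75 in the report above.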

Evaluation of all models

First, let's look at how the metrics turned out for all the models tried:

In [51]:

metrics.index.set_names(['model', 'group'], inplace=True)

metrics.reset_index(inplace=True)
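`set_names` labels the two levels of the row index of `metrics` (model name and class group), and `reset_index` moves them into ordinary columns. The resulting long format has one row per model and group pair. That layout is what lets seaborn address the levels as `x='group'` and `hue='model'`. The plain-Python sketch below performs the same reshaping, using hypothetical model names and placeholder numbers that are not results from this article:

```python
# Hypothetical metrics keyed by a (model, group) pair, mimicking a
# two-level row index; the numbers are placeholders, not results.
indexed = {
    ("model_a", "NORM"): {"precision": 0.80, "recall": 0.90},
    ("model_a", "HYP"): {"precision": 0.70, "recall": 0.40},
    ("model_b", "NORM"): {"precision": 0.82, "recall": 0.91},
}

def reset_index(table, names=("model", "group")):
    """Move the tuple keys into ordinary named fields (long format)."""
    rows = []
    for key, values in table.items():
        row = dict(zip(names, key))
        row.update(values)
        rows.append(row)
    return rows

for row in reset_index(indexed):
    print(row)
```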

Precision metric

In [52]:

fig, ax = plt.subplots(figsize=(8, 3))
ax = sns.barplot(metrics, x='group', y='precision', hue='model', ax=ax)
sns.move_legend(ax, "upper left", bbox_to_anchor=(1, 1))
plt.show()

Recall metric

In [53]:

fig, ax = plt.subplots(figsize=(8, 3))
ax = sns.barplot(metrics, x='group', y='recall', hue='model', ax=ax)
sns.move_legend(ax, "upper left", bbox_to_anchor=(1, 1))
plt.show()

F1-score metric

In [54]:

fig, ax = plt.subplots(figsize=(8, 3))
ax = sns.barplot(metrics, x='group', y='f1-score', hue='model', ax=ax)
sns.move_legend(ax, "upper left", bbox_to_anchor=(1, 1))
plt.show()

Final assessment

Please form your own opinion of the overall result.

From my point of view, the combined model of the ECG curves together with the metadata looks best. The main reason is that it achieves the best results for the least represented classification groups.
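The support column of the test-set report for model05 shows how unevenly the groups are represented. It lists 2801 positive labels in total. Because one recording can carry several diagnoses, this is a count of labels, not of recordings.

The short calculation below gives each group's share, which is the weight that group receives in a support-weighted average. It compares that share with the fixed 1/5 that each group receives in a macro average. The support numbers are copied from the report; everything else is simple arithmetic:

```python
# Support (number of positive labels per group) from the test-set report.
support = {"NORM": 964, "MI": 553, "STTC": 523, "CD": 498, "HYP": 263}
total = sum(support.values())  # 2801
macro_weight = 1 / len(support)

for group, count in sorted(support.items(), key=lambda kv: kv[1]):
    share = count / total
    # weight in a support-weighted average vs. the fixed weight in a macro average
    print(f"{group:5s} {count:4d}  weighted: {share:.1%}  macro: {macro_weight:.1%}")
```

HYP, the rarest group, enters the weighted average with about 9.4% but the macro average with 20%. A model's advantage on HYP and the other small groups therefore shows up mainly in the macro-averaged scores and in the per-group bars.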


Jiří Raška

works as an IT architect. In recent years he has focused on integration and communication projects in healthcare. His hobbies also include paragliding and mountain biking.
